If you’re here, you’re probably experiencing a common issue: trying to access a certain port on an EC2 instance located in a private subnet of a Virtual Private Cloud (VPC). A couple of months ago, we got a call from one of our customers who was experiencing the same issue. They wanted to open up the API servers in their VPC to one of their own customers, but they didn’t know how. In particular, they were looking for a solution that wouldn’t compromise the security of their environment. We realized this issue is not unique to our customer, so we thought a blog post explaining how we solved it would be helpful!

To provide some context, once you have an API server in a private subnet of your VPC, it is closed to the outside world. No one on the internet can reach that server, because instances in a private subnet have no public IP address and no inbound route from the internet gateway. There are a few ways around this, including Virtual Private Network (VPN) connections to your VPC, which allow you to open up private access. Unfortunately, this is not a viable solution if you need to open up your API server to the world, which was the case with our customer. The goal was to provide direct access from the internet for any user, without a VPN connection.

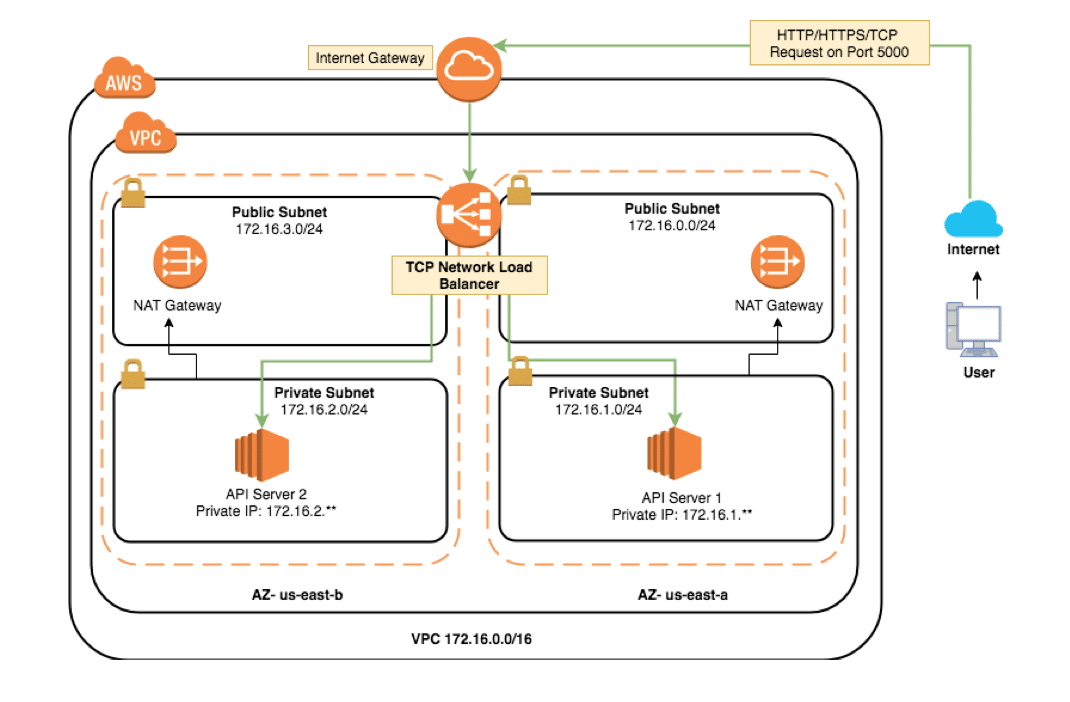

To solve this issue for our customer, one of the architecture changes we recommended was adding an internet-facing AWS Network Load Balancer with a TCP listener in the public subnets of the VPC. In addition to this load balancer, we also needed to create an instance-based target group.

Keep reading to learn how you can do this – we even included an architecture diagram to make things easier! Please note that our example includes fake IP addresses.

Problem: Accessing an API endpoint in an EC2 Instance in a Private Subnet from the Internet.

Suggested AWS Architecture Diagram:

Features of our diagram:

- Multi AZ: we used one public and one private subnet in each of two availability zones, all within the same VPC.

- Multi EC2 (API Servers): we deployed an API server in each private subnet in each availability zone.

- Multi NAT Gateways: a NAT gateway allows the EC2 instances in the private subnets to make outbound connections to the internet. To achieve high availability, we deployed one NAT gateway in the public subnet of each availability zone.

- TCP Load balancer health checks: the TCP load balancer routes user requests only to API servers that pass its health checks. If one AZ goes down, the API server in the other AZ keeps handling user requests.
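To make the health check idea concrete, here is a minimal Python sketch of what a TCP health check boils down to: try to open a TCP connection to the target and treat success as healthy. The function names are ours, for illustration only. The real load balancer also applies intervals and consecutive-success thresholds, which this sketch leaves out.

```python
import socket


def tcp_health_check(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Connection refused, unreachable host, or timeout: treat as unhealthy.
        return False


def healthy_targets(targets: list[tuple[str, int]]) -> list[tuple[str, int]]:
    """Keep only the (host, port) targets that currently accept TCP connections."""
    return [target for target in targets if tcp_health_check(*target)]
```

Calling `healthy_targets` with the private IPs of the two API servers and port 5000 (from a host inside the VPC) would return only the servers that are currently accepting connections.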

Although we did not make this change, you can also implement Multi-Region to handle a region failure scenario and enable higher availability.

VPC Configurations:

| Subnet | AZ | CIDR | IGW Route Out | NAT GW Route Out |
| --- | --- | --- | --- | --- |
| public-subnet-a | us-east-1a | 172.16.0.0/24 | Yes | No |
| public-subnet-b | us-east-1b | 172.16.3.0/24 | Yes | No |
| private-subnet-a | us-east-1a | 172.16.1.0/24 | No | Yes |
| private-subnet-b | us-east-1b | 172.16.2.0/24 | No | Yes |
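Before creating subnets like these, it can help to sanity-check the CIDR plan. The sketch below uses Python's standard `ipaddress` module to confirm that the four example subnets sit inside the VPC range and do not overlap. The VPC CIDR `172.16.0.0/16` is our assumption (the post does not state it), and `validate_subnets` is a hypothetical helper name.

```python
import ipaddress
from itertools import combinations

# Assumption: the VPC range is not given in the post; 172.16.0.0/16 is a guess
# that happens to contain all four example subnets.
VPC_CIDR = ipaddress.ip_network("172.16.0.0/16")

# Subnet name -> (availability zone, CIDR), copied from the table above.
SUBNETS = {
    "public-subnet-a": ("us-east-1a", "172.16.0.0/24"),
    "public-subnet-b": ("us-east-1b", "172.16.3.0/24"),
    "private-subnet-a": ("us-east-1a", "172.16.1.0/24"),
    "private-subnet-b": ("us-east-1b", "172.16.2.0/24"),
}


def validate_subnets(vpc, subnets):
    """Return a list of human-readable problems; an empty list means the plan is consistent."""
    problems = []
    networks = {name: ipaddress.ip_network(cidr) for name, (_, cidr) in subnets.items()}
    for name, net in networks.items():
        if not net.subnet_of(vpc):
            problems.append(f"{name} ({net}) is outside the VPC range {vpc}")
    for (name_a, net_a), (name_b, net_b) in combinations(networks.items(), 2):
        if net_a.overlaps(net_b):
            problems.append(f"{name_a} ({net_a}) overlaps {name_b} ({net_b})")
    return problems


if __name__ == "__main__":
    for problem in validate_subnets(VPC_CIDR, SUBNETS):
        print(problem)
```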

EC2 Configuration:

| Name | AZ | Subnet | Private IP | Security Group |
| --- | --- | --- | --- | --- |
| API Server1 | us-east-1a | private-subnet-a | 172.16.1.** | Allow inbound traffic to TCP port 5000 from 0.0.0.0/0 or from any specific source IP address on the internet. |
| API Server2 | us-east-1b | private-subnet-b | 172.16.2.** | Allow inbound traffic to TCP port 5000 from 0.0.0.0/0 or from any specific source IP address on the internet. |
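The security group rule above can be expressed as the `IpPermissions` structure that boto3's `authorize_security_group_ingress` accepts. The sketch below only builds that structure; the helper name is ours and the group ID in the usage comment is a placeholder. One thing to keep in mind, as far as we understand the default behavior: with instance targets the load balancer preserves the client's source IP, which is why the rule must allow the client addresses themselves, and health checks arrive from the load balancer's private IPs, so if you restrict the rule to specific client IPs you will probably also need to allow the VPC (or public subnet) range.

```python
def api_ingress_permissions(client_cidrs, port=5000, health_check_cidr=None):
    """Build an IpPermissions list allowing TCP traffic to `port` from the given CIDRs.

    health_check_cidr is optional: pass the VPC or public-subnet CIDR when
    client_cidrs is restricted, so load balancer health checks are still allowed.
    """
    ranges = [{"CidrIp": cidr, "Description": "API clients"} for cidr in client_cidrs]
    if health_check_cidr and health_check_cidr not in client_cidrs:
        ranges.append({"CidrIp": health_check_cidr, "Description": "Load balancer health checks"})
    return [{"IpProtocol": "tcp", "FromPort": port, "ToPort": port, "IpRanges": ranges}]


# Usage sketch (requires boto3 and AWS credentials; the group ID is a placeholder):
#   import boto3
#   ec2 = boto3.client("ec2", region_name="us-east-1")
#   ec2.authorize_security_group_ingress(
#       GroupId="sg-0123456789abcdef0",
#       IpPermissions=api_ingress_permissions(["0.0.0.0/0"]),
#   )
```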

Solution:

- Create a TCP network load balancer:
  - Make it internet facing
  - Add a listener on TCP port 5000
  - Choose the public subnets that are in the same availability zones (AZs) as your private subnets

- Create an instance-based target group:
  - Use the TCP protocol on port 5000
  - For the health check, use either a TCP check on port 5000 or an HTTP check against a health check path
  - Add the two API servers as target instances to achieve high availability and balance the request load between the servers

- Once the target instances (API servers) become healthy, you will be able to access the API endpoints directly from the public internet on port 5000, using the new TCP load balancer's DNS name or its Elastic IP address if you assigned one
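If you prefer to script these steps rather than click through the console, they map fairly directly onto the boto3 `elbv2` client. The following is a rough sketch, not the exact procedure we ran for our customer: the function name, the resource names, and every ID in the usage comment are placeholders, and the client is passed in so the function itself has no hard dependency on AWS credentials.

```python
def provision_nlb(elbv2, vpc_id, public_subnet_ids, instance_ids, port=5000, name="api-nlb"):
    """Create an internet-facing NLB with a TCP listener and an instance target group.

    `elbv2` is expected to be a boto3 client created with boto3.client("elbv2").
    Returns the DNS name of the new load balancer.
    """
    # Step 1: internet-facing network load balancer in the public subnets.
    lb = elbv2.create_load_balancer(
        Name=name,
        Subnets=public_subnet_ids,
        Scheme="internet-facing",
        Type="network",
    )["LoadBalancers"][0]

    # Step 2: instance-based target group, TCP on the API port, TCP health check.
    tg = elbv2.create_target_group(
        Name=f"{name}-tg",
        Protocol="TCP",
        Port=port,
        VpcId=vpc_id,
        TargetType="instance",
        HealthCheckProtocol="TCP",
        HealthCheckPort=str(port),
    )["TargetGroups"][0]

    # Step 3: register the API servers as targets.
    elbv2.register_targets(
        TargetGroupArn=tg["TargetGroupArn"],
        Targets=[{"Id": instance_id} for instance_id in instance_ids],
    )

    # Step 4: TCP listener that forwards to the target group.
    elbv2.create_listener(
        LoadBalancerArn=lb["LoadBalancerArn"],
        Protocol="TCP",
        Port=port,
        DefaultActions=[{"Type": "forward", "TargetGroupArn": tg["TargetGroupArn"]}],
    )
    return lb["DNSName"]


# Usage sketch (requires boto3 and AWS credentials; all IDs are placeholders):
#   import boto3
#   dns_name = provision_nlb(
#       boto3.client("elbv2", region_name="us-east-1"),
#       vpc_id="vpc-0123456789abcdef0",
#       public_subnet_ids=["subnet-aaaa1111", "subnet-bbbb2222"],
#       instance_ids=["i-aaaa1111", "i-bbbb2222"],
#   )
```

Once the targets report healthy, the API should be reachable at the returned DNS name on port 5000.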

References:

https://docs.aws.amazon.com/elasticloadbalancing/latest/network/create-network-load-balancer.html